Imagine teaching a child to recognize animals. Once they’ve learned what a cat looks like, they don’t need to start from scratch to recognize a tiger. They transfer what they already know—fur, whiskers, shape—and adapt. That's exactly how transfer learning works in artificial intelligence. Instead of training a model from zero, it builds on prior knowledge, saving time and improving accuracy.

This approach powers everything from medical diagnostics to language models, letting AI systems do more with less data. In an information-overloaded world, transfer learning helps AI learn faster, adapt more effectively, and perform better, bringing us a step closer to more general intelligence.

How Transfer Learning Works

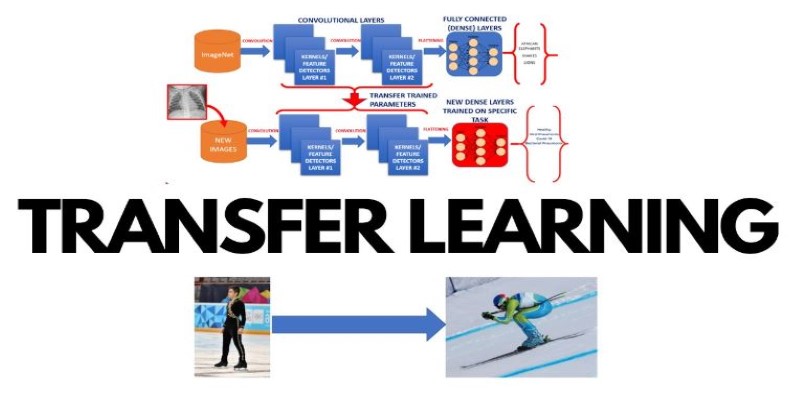

The core idea of transfer learning is simple: instead of training a model from scratch on new data, it reuses knowledge from a previously trained model and fine-tunes it for a new task. This saves computational resources and often yields better accuracy, because the model has already learned fundamental patterns.

The most popular approach is to start from a pre-trained neural network. These models have been trained on huge datasets, such as ImageNet or large text corpora, and have learned detailed representations of their domains. For example, an image recognition model trained on millions of images has learned to detect basic shapes, textures, and structures. When it is applied to a different but related task, such as recognizing medical conditions from X-rays, it does not have to be trained from scratch. Instead, its existing knowledge lets it adapt quickly with relatively little new training data.
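To make the idea concrete, here is a minimal sketch in plain Python. It is an illustration, not a real pre-trained network: `PRETRAINED_WEIGHTS` holds made-up values standing in for layers learned on a large source dataset, and `small_dataset` is a hypothetical four-example dataset for the new task. The feature extractor stays fixed, and only a small new classifier is trained on top of it.

```python
import math

# Hypothetical stand-in for the early layers of a pre-trained network.
# In practice these weights would come from training on a large source dataset.
PRETRAINED_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Frozen feature extractor: a linear map followed by tanh. Never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    head = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x)
            p = sigmoid(sum(h * fi for h, fi in zip(head, f)) + bias)
            err = p - y  # gradient of the log loss with respect to the logit
            head = [h - lr * err * fi for h, fi in zip(head, f)]
            bias -= lr * err
    return head, bias


def predict(head, bias, x):
    f = extract_features(x)
    return 1 if sigmoid(sum(h * fi for h, fi in zip(head, f)) + bias) >= 0.5 else 0


# Tiny labeled dataset for the new task: label 1 when the first value is larger.
small_dataset = [([2.0, 0.0], 1), ([0.0, 2.0], 0), ([1.5, 0.5], 1), ([0.5, 1.5], 0)]
head, bias = train_head(small_dataset)
```

Because the frozen features already separate the two classes, four labeled examples are enough for the new head to learn the task. Real systems follow the same pattern at a much larger scale.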

Fine-tuning is essential in transfer learning. Rather than throwing away the whole pre-trained model, the later layers are adjusted while the earlier layers keep their learned features. This helps the model retain what it has already learned while adapting to the particular needs of the new task. How much to fine-tune depends on the new dataset's size and complexity. Some cases need only minor adjustments to the final layers, while others require retraining much more of the network.
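The freezing mechanism described above can be sketched in a few lines of plain Python. Everything here is illustrative: the `Layer` class, the layer names, the weights, and the gradient values are hypothetical, and deep learning frameworks provide their own ways of marking parameters as frozen.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    weights: list
    trainable: bool = True


def freeze_early_layers(layers, num_trainable):
    """Freeze every layer except the last `num_trainable` ones."""
    cutoff = len(layers) - num_trainable
    for i, layer in enumerate(layers):
        layer.trainable = i >= cutoff


def sgd_step(layers, grads, lr=0.01):
    """Apply one gradient-descent step, skipping frozen layers."""
    for layer in layers:
        if not layer.trainable:
            continue
        g = grads[layer.name]
        layer.weights = [w - lr * gi for w, gi in zip(layer.weights, g)]


# Hypothetical three-layer model with made-up weights.
model = [
    Layer("conv1", [0.2, -0.1]),
    Layer("conv2", [0.4, 0.3]),
    Layer("classifier", [0.0, 0.0]),
]

# Light fine-tuning: only the final layer is allowed to change.
freeze_early_layers(model, num_trainable=1)

# Made-up gradients, as if computed on a batch from the new task.
grads = {"conv1": [1.0, 1.0], "conv2": [1.0, 1.0], "classifier": [1.0, -1.0]}
sgd_step(model, grads, lr=0.1)
```

Raising `num_trainable` corresponds to deeper fine-tuning: with `num_trainable=3` every layer in this toy model would be updated.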

Applications of Transfer Learning

Transfer learning is widely used across industries, enabling AI to perform tasks that were once impractical due to limited data availability. One of the most notable applications is in natural language processing (NLP). Large language models, such as GPT and BERT, are pre-trained on massive datasets and then fine-tuned for specific applications like chatbots, document summarization, and sentiment analysis. This approach allows businesses to deploy AI-driven solutions without requiring billions of training examples for each unique task.

In healthcare, transfer learning has become invaluable for diagnosing diseases from medical images. AI models trained on general image datasets can be fine-tuned to detect abnormalities in X-rays, MRIs, and CT scans. Given that medical data is often scarce and expensive to annotate, transfer learning bridges the gap by leveraging existing visual knowledge and applying it to medical diagnostics.

Another area where transfer learning shines is robotics. Training robots from scratch for every new task would be inefficient. Instead, robots can use knowledge from previous tasks to navigate new environments, recognize objects, and perform actions with greater precision. This capability is particularly useful in industrial automation and autonomous vehicles, where adaptability is key.

In the financial sector, fraud detection models benefit from transfer learning by reusing patterns learned from past transactions to identify fraud in new data. Since fraud techniques evolve constantly, AI must adapt quickly. By transferring knowledge from older datasets, these models can keep pace with emerging threats with far less retraining than starting over.

Advantages and Challenges of Transfer Learning

One of the biggest advantages of transfer learning is efficiency. Training deep learning models from scratch demands extensive computational power and vast datasets. By leveraging pre-trained models, AI systems learn faster, cutting down on costs and energy consumption. This makes AI development more accessible, even for businesses and researchers with limited resources.

Another major benefit is improved performance. Since pre-trained models have already learned key features of their domain, they often generalize better when applied to related tasks. Instead of depending solely on new data, they draw on patterns from broader datasets, which tends to produce more accurate and reliable outcomes.

Transfer learning also addresses data scarcity. Many industries lack large, high-quality datasets. In medical imaging, for example, labeled data is hard to obtain because of privacy concerns and the cost of expert annotation. By reusing existing knowledge, transfer learning allows AI to function effectively even with small datasets.

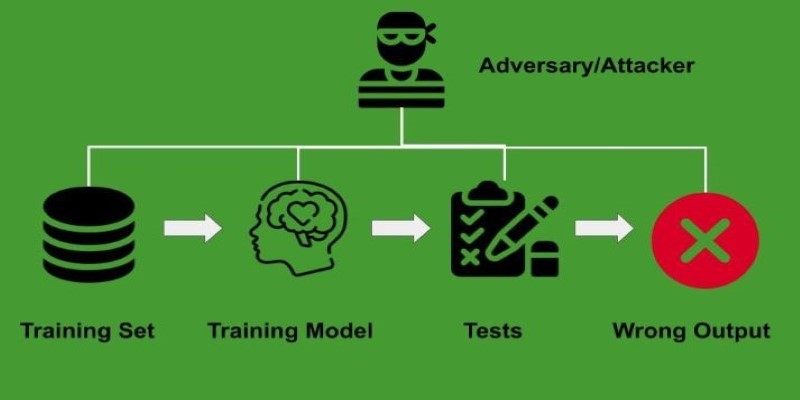

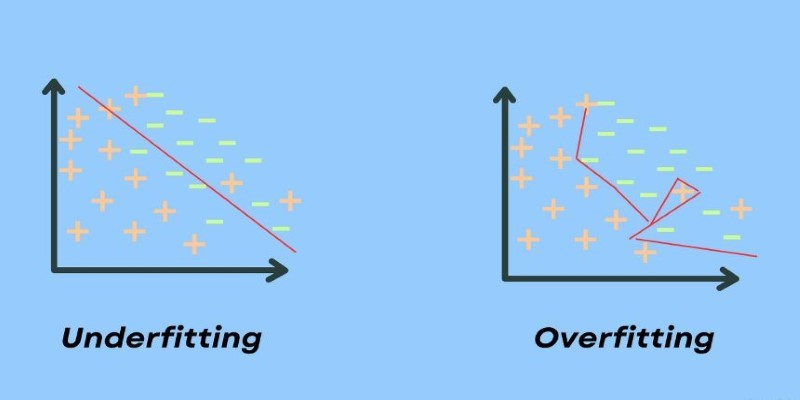

Despite its benefits, transfer learning has challenges. Domain mismatch is a significant issue: if the original dataset differs too much from the new task, the transferred features may help little or even hurt performance. Catastrophic forgetting is another risk, where excessive fine-tuning erases valuable prior knowledge. Additionally, fine-tuning large models still requires powerful hardware and technical expertise, making implementation complex for some organizations.
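One common way to limit catastrophic forgetting, not covered in this article, is to add a penalty that pulls the weights back toward their pre-trained values during fine-tuning. The sketch below is a toy illustration under simplifying assumptions: the weights, the constant task gradient, and the `anchor_strength` parameter name are all hypothetical.

```python
def fine_tune_step(weights, pretrained, task_grad, lr=0.1, anchor_strength=0.0):
    """One gradient step on: task loss + (anchor_strength / 2) * ||w - pretrained||^2."""
    return [
        w - lr * (g + anchor_strength * (w - w0))
        for w, w0, g in zip(weights, pretrained, task_grad)
    ]


def drift(weights, pretrained):
    """Largest absolute change of any weight relative to its pre-trained value."""
    return max(abs(w - w0) for w, w0 in zip(weights, pretrained))


pretrained = [1.0, -2.0]  # hypothetical pre-trained weights
task_grad = [1.0, 1.0]    # hypothetical constant gradient from the new task

plain = list(pretrained)
anchored = list(pretrained)
for _ in range(100):
    plain = fine_tune_step(plain, pretrained, task_grad)
    anchored = fine_tune_step(anchored, pretrained, task_grad, anchor_strength=0.5)

print(drift(plain, pretrained), drift(anchored, pretrained))
```

In this toy setting the unanchored weights keep moving away from their starting point, while the anchored weights settle at a bounded distance from it. The trade-off is that a stronger anchor also limits how far the model can adapt to the new task.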

The Future of Transfer Learning

As AI continues to evolve, transfer learning will remain at the forefront of making models more efficient and versatile. Researchers are focusing on enhancing adaptability across diverse domains, minimizing the need for extensive fine-tuning. One promising direction is meta-learning, where models learn how to learn, improving their ability to generalize across different tasks.

Another breakthrough is self-supervised learning, where AI models are trained on massive amounts of unlabeled data before fine-tuning on smaller labeled datasets. This reduces dependence on manual annotation, making AI more scalable and accessible.

Beyond traditional AI applications, transfer learning is expanding into creative fields such as music generation, video editing, and design. AI models can now transfer artistic styles, understand user preferences, and even enhance human creativity. When combined with generative models, transfer learning could lead to more personalized and intelligent content creation.

Despite its challenges, transfer learning is shaping the future of AI by making it more efficient, adaptable, and resource-friendly. As technology progresses, it will continue to redefine what AI can achieve across various industries.

Conclusion

Transfer learning is transforming AI by enabling models to apply pre-existing knowledge to new tasks, making learning faster and more efficient. By reducing computational demands and overcoming data limitations, it makes AI more practical across industries. As advancements like meta-learning and self-supervised learning progress, transfer learning will continue to drive innovation in healthcare, finance, robotics, and creative fields. Ultimately, it may help narrow the gap between human and machine learning, pushing AI toward greater adaptability and problem-solving capability.