Artificial intelligence is changing the way the world works, and deep learning sits at the center of that transformation. At its core, deep learning is a branch of machine learning loosely inspired by the way the human brain processes information. Unlike traditional algorithms that rely on explicitly programmed rules, deep learning algorithms learn patterns from large amounts of data and improve as they are trained on more examples, with far less hand-written logic.

This ability to recognize patterns, make predictions, and refine decisions has made deep learning an essential part of everything from speech recognition to medical diagnostics. But how do these algorithms actually work, and what makes them different from other machine learning models?

How Deep Learning Algorithms Work

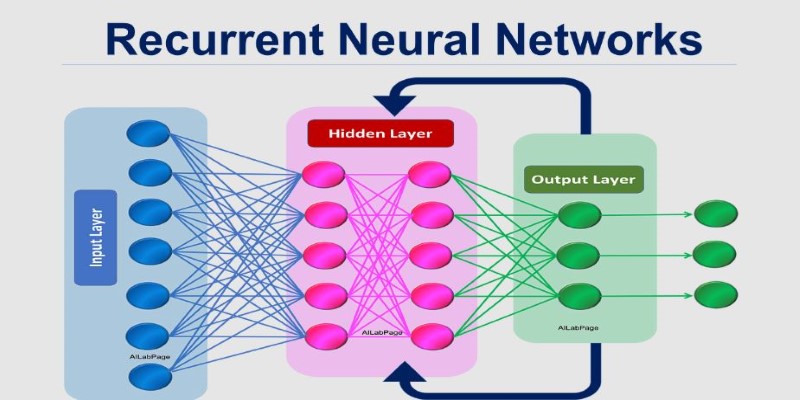

Deep learning is built on artificial neural networks, which are loosely modeled on the human brain. These networks are composed of several layers, each playing a different role in the learning process. The first layer, known as the input layer, receives raw data such as images, audio files, or numbers. The data then passes through a series of hidden layers, each of which transforms the information before handing it on, until the final output layer produces a prediction.
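To make the flow from input layer to output layer concrete, here is a minimal sketch in Python with NumPy. The layer sizes, the ReLU activation, and the names `relu` and `forward` are assumptions chosen for illustration, not a description of any particular production model.

```python
import numpy as np

def relu(x):
    # A common hidden-layer activation: negative values become zero.
    return np.maximum(0.0, x)

def forward(x, layers):
    """Pass a batch of inputs through a list of (weights, bias) pairs."""
    activation = x
    for i, (W, b) in enumerate(layers):
        z = activation @ W + b
        # Hidden layers apply the nonlinearity; the output layer returns raw scores.
        activation = relu(z) if i < len(layers) - 1 else z
    return activation

rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(4, 8)), np.zeros(8)),  # input (4 features) -> hidden
    (rng.normal(size=(8, 8)), np.zeros(8)),  # hidden -> hidden
    (rng.normal(size=(8, 3)), np.zeros(3)),  # hidden -> output (3 scores)
]

x = rng.normal(size=(5, 4))   # a batch of 5 examples with 4 features each
scores = forward(x, layers)
print(scores.shape)           # (5, 3)
```

The weights here are random, so the scores are meaningless until the network is trained.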

Each connection between neurons in adjacent layers has a weight, which determines how strongly one neuron influences another. During training, the learning algorithm adjusts these weights to reduce the gap between the network’s predictions and the correct answers. The method used to work out how much each weight contributed to the error is called backpropagation: the error is propagated backward from the output layer through the network, and the weights are updated a little on every pass. The more layers a network has, the more intricate the relationships it can capture. This is where the term deep learning comes from: "deep" refers to the number of layers in these deep neural networks.
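The weight-adjustment loop can be sketched in a few lines. The example below is a toy under stated assumptions: a single hidden layer with `tanh`, a mean squared error loss, plain gradient descent, and the tiny XOR dataset. The hidden size, learning rate, and step count are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy dataset (XOR): four examples, two inputs, one target each.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

# Randomly initialised weights for a 2 -> 8 -> 1 network.
W1 = rng.normal(scale=0.5, size=(2, 8))
b1 = np.zeros(8)
W2 = rng.normal(scale=0.5, size=(8, 1))
b2 = np.zeros(1)
lr = 0.1  # learning rate (assumed value)

losses = []
for step in range(1000):
    # Forward pass: compute predictions and the error.
    h = np.tanh(X @ W1 + b1)
    pred = h @ W2 + b2
    err = pred - y
    losses.append(float(np.mean(err ** 2)))

    # Backward pass: apply the chain rule from the output back to the input.
    grad_pred = 2.0 * err / err.size
    grad_W2 = h.T @ grad_pred
    grad_b2 = grad_pred.sum(axis=0)
    grad_h = grad_pred @ W2.T
    grad_z = grad_h * (1.0 - h ** 2)   # derivative of tanh
    grad_W1 = X.T @ grad_z
    grad_b1 = grad_z.sum(axis=0)

    # Gradient descent: nudge every weight against its gradient.
    W1 -= lr * grad_W1
    b1 -= lr * grad_b1
    W2 -= lr * grad_W2
    b2 -= lr * grad_b2

print(f"loss at start: {losses[0]:.4f}, loss at end: {losses[-1]:.4f}")
```

Frameworks such as PyTorch or TensorFlow compute these gradients automatically; writing them by hand is only useful for seeing what backpropagation does.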

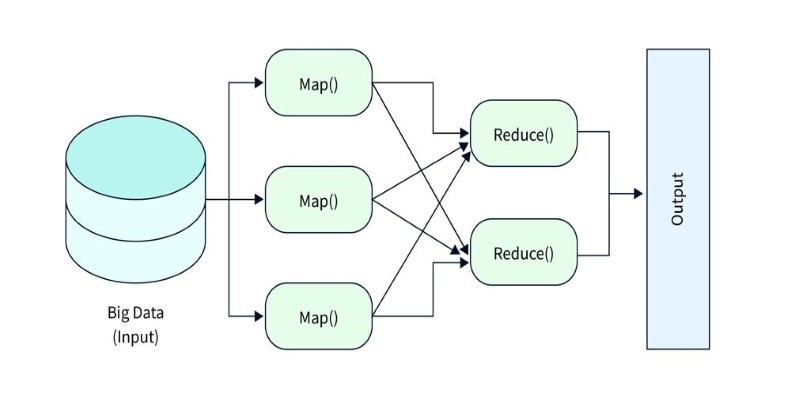

Training a deep learning model takes large amounts of data and computational resources. Because deep learning algorithms depend on pattern recognition, they typically have to see thousands or even millions of examples before they can make good predictions. As a result, organizations often spend heavily on high-performance GPUs and cloud computing to train these models effectively. Once trained, however, deep learning models can often make predictions in real time.

Types of Deep Learning Algorithms

There are many different types of deep learning algorithms, each geared towards a specific kind of task. Convolutional Neural Networks (CNNs) are mostly used for image processing. CNNs slide small filters across an image, searching for patterns such as edges, colors, and textures in order to classify objects or find anomalies. For this reason, CNNs are widely employed in facial recognition, medical imaging, and the vision systems of self-driving cars.
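The filter-sliding idea behind CNNs can be illustrated with a hand-written filter. This is a minimal sketch: the `conv2d` helper and the vertical-edge kernel are assumptions for illustration, and in a real CNN the filter values are learned during training rather than fixed by hand.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide a kernel over a 2D image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the patch by the kernel element-wise and sum the result.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 6x6 image: dark on the left half, bright on the right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# A filter that responds where brightness increases from left to right.
vertical_edge = np.array([[-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0]])

response = conv2d(image, vertical_edge)
print(response[0])  # [0. 3. 3. 0.]
```

The response is zero over the flat regions and large where the filter overlaps the boundary, which is how a filter "finds" an edge.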

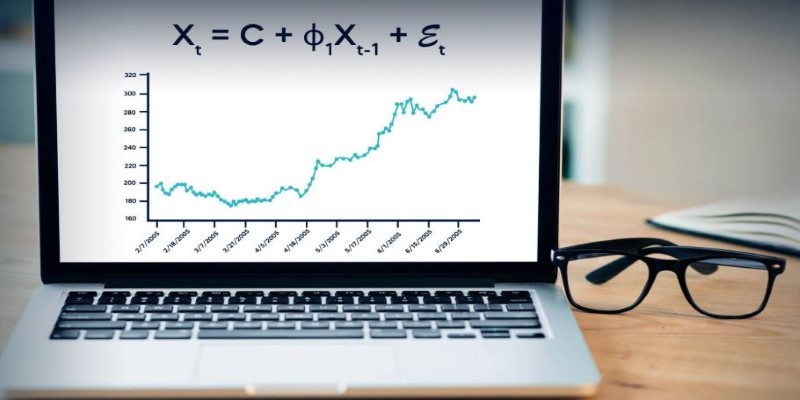

Recurrent Neural Networks (RNNs) specialize in processing sequential data by carrying forward a hidden state that summarizes what they have seen so far. This makes them well suited to tasks like speech recognition, language translation, and time-series forecasting such as stock market prediction. Their memory-like capability enables them to predict the next word in a sentence or the next note in a musical composition.
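The memory-like behavior comes from feeding the previous hidden state back in at every step. Below is a minimal sketch of a plain RNN forward pass; the function name `rnn_forward`, the `tanh` activation, and the sizes are illustrative assumptions, and practical systems usually use gated variants such as LSTMs or GRUs.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a simple recurrent layer over a sequence, one step at a time."""
    h = np.zeros(W_hh.shape[0])  # the hidden state starts empty
    states = []
    for x_t in inputs:
        # The new state depends on the current input AND the previous state.
        h = np.tanh(x_t @ W_xh + h @ W_hh + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
input_dim, hidden_dim, seq_len = 3, 5, 4
W_xh = rng.normal(scale=0.5, size=(input_dim, hidden_dim))
W_hh = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)

sequence = rng.normal(size=(seq_len, input_dim))
states = rnn_forward(sequence, W_xh, W_hh, b_h)
print(states.shape)  # (4, 5)
```

Because each state is computed from the one before it, changing the first item in the sequence changes every later state, including the last.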

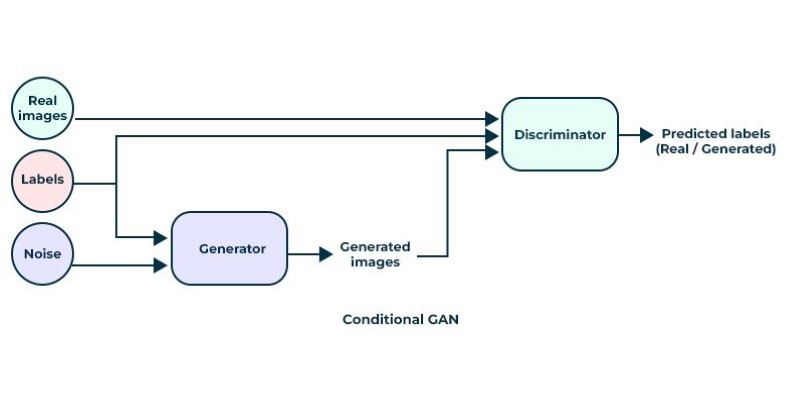

Then, there are Generative Adversarial Networks (GANs), which consist of two competing neural networks. One generates new data, while the other evaluates whether the data is real or fake. This rivalry results in incredibly realistic images, videos, and even voice synthesis. GANs have been used in everything from creating deepfake videos to enhancing old photographs with stunning detail.
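The rivalry can be expressed as two opposing loss functions. The sketch below leaves out the networks themselves and only shows the objectives, assuming the discriminator outputs a probability that a sample is real. The helper names and the non-saturating form of the generator loss are assumptions for illustration.

```python
import numpy as np

def bce(probs, targets, eps=1e-12):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    probs = np.clip(probs, eps, 1.0 - eps)
    return float(-np.mean(targets * np.log(probs)
                          + (1.0 - targets) * np.log(1.0 - probs)))

def discriminator_loss(d_real, d_fake):
    # The discriminator wants real samples scored as 1 and fakes scored as 0.
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    # The generator wants its fakes scored as 1, i.e. mistaken for real data.
    return bce(d_fake, np.ones_like(d_fake))

# Hypothetical discriminator outputs (probability that each sample is real).
d_real = np.array([0.9, 0.8])
d_fake_caught = np.array([0.1, 0.2])    # fakes the discriminator rejects
d_fake_fooling = np.array([0.9, 0.8])   # fakes the discriminator accepts

print(generator_loss(d_fake_caught) > generator_loss(d_fake_fooling))  # True
```

Training alternates between lowering the discriminator loss and lowering the generator loss, so each network's progress makes the other's task harder.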

Transformers are another breakthrough in deep learning. Unlike RNNs, which read a sequence one step at a time, transformers process all positions of the input in parallel and use an attention mechanism to relate them to one another. This design underpins large-scale language models such as those behind ChatGPT. Parallel processing makes transformers efficient to train and powerful for natural language processing tasks, including text generation, question-answering, and even creative writing.
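The "all at once" processing rests on self-attention, where every position in the input is compared with every other position in a single matrix operation. Here is a minimal single-head sketch; the projection matrices are random stand-ins for learned weights, and the sizes are assumptions for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over a sequence X."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    # Every token is scored against every other token in one matrix product.
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_head = 4, 8, 8
X = rng.normal(size=(seq_len, d_model))   # one embedding vector per token
W_q = rng.normal(size=(d_model, d_head))
W_k = rng.normal(size=(d_model, d_head))
W_v = rng.normal(size=(d_model, d_head))

output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape, weights.shape)  # (4, 8) (4, 4)
```

Each row of `weights` sums to 1 and describes how much one token draws on every token in the sequence. Full transformers add multiple heads, positional information, and feed-forward layers on top of this step.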

Each of these deep learning models has unique strengths and limitations, but together, they form the backbone of modern artificial intelligence.

Applications of Deep Learning Algorithms

Deep learning algorithms are transforming industries by automating complex tasks and improving decision-making. One of the most significant applications is in healthcare, where AI-powered diagnostic tools analyze medical scans to detect diseases like cancer earlier and with greater accuracy. These systems assist doctors in providing more effective treatments, ultimately improving patient outcomes.

In finance, deep learning enhances fraud detection by identifying unusual spending patterns in real-time. Automated trading platforms also leverage these algorithms to predict market trends and optimize investment strategies, increasing efficiency while minimizing risk.

The automotive sector is seeing a major shift with self-driving technology. Deep learning enables autonomous vehicles to process sensor data, recognize traffic signals, and detect pedestrians, making real-time driving decisions that improve road safety.

Entertainment is another industry reshaped by deep learning. Streaming services analyze user preferences to recommend content, while AI-powered tools generate music, edit videos, and even create digital actors for films and games.

However, deep learning presents challenges, including data requirements, transparency issues, and bias. Since AI models learn from historical data, they can unintentionally reinforce discrimination. Researchers are actively addressing these concerns to ensure AI remains ethical and beneficial for all industries.

The Future of Deep Learning

Deep learning has already revolutionized industries, but its full potential is still unfolding. With advancements in computing power and data availability, AI models are likely to become more capable, better at reasoning, and able to solve complex problems with less human input.

A promising direction is the continued fusion of deep learning with reinforcement learning, which allows AI to learn through trial and error, much as humans do. This could lead to robots that acquire new skills without extensive pre-labeled data. Another promising area is neuromorphic computing, hardware designed to mimic the brain’s processing, which could make AI models faster and more energy-efficient.

Despite challenges like data dependency and ethical concerns, deep learning’s impact will only grow. From healthcare to self-driving cars, it will continue to drive innovation, making AI more accessible and transformative. As research progresses, deep learning algorithms will become more sophisticated, reshaping industries and everyday life in ways we are just beginning to imagine.

Conclusion

Deep learning algorithms have revolutionized technology, enabling AI to recognize patterns, predict outcomes, and solve problems with minimal human input. From healthcare to self-driving cars, these neural networks enhance efficiency and decision-making across industries. However, challenges like data demands, transparency, and bias remain. As AI research progresses, deep learning will become even more advanced, pushing the boundaries of machine intelligence. The journey is far from over, and its impact will only continue to expand in the years ahead.