Predicting the future has always fascinated humanity, and machines have their own way of doing it: autoregressive models. These models use past data to estimate what comes next, whether in language, finance, or weather forecasting. Rather than treating observations as isolated points, they capture patterns across a sequence, which lets them generate text, forecast stock prices, or even compose music.

Unlike humans, they don’t "think" but follow structured statistical rules to make predictions. Their power lies in their ability to recognize trends and extend them logically, making them indispensable in modern artificial intelligence applications.

How Autoregressive Models Work

The defining characteristic of an autoregressive model is that each output depends on past values. The term "autoregressive" means regression on itself: each new prediction is derived from previously observed values of the same series. In the classical statistical form, the next value in a series is computed as a weighted sum of a fixed number of past values plus a random noise term. The noise term accounts for uncertainty and external influences that historical data alone does not capture. Neural autoregressive models keep the same principle but replace the weighted sum with a learned nonlinear function.
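The weighted-sum description above corresponds to what is usually written as an AR(p) model. The symbols below follow the common textbook convention and are not taken from this article: $X_t$ is the value at time $t$, $p$ is the number of past values used, $\varphi_i$ are the weights, $c$ is a constant, and $\varepsilon_t$ is the random noise term.

```latex
X_t = c + \sum_{i=1}^{p} \varphi_i \, X_{t-i} + \varepsilon_t
```

With $p = 1$ this reduces to $X_t = c + \varphi_1 X_{t-1} + \varepsilon_t$, where each value depends only on the one immediately before it.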

To make this concrete, consider a simple model that forecasts tomorrow's temperature from the temperatures of previous days. If, for instance, the last three days were 30°C, 31°C, and 29°C, an autoregressive model combines those values using weights it has learned from earlier data and outputs an estimate for the next value in the series. With a longer history to learn from, it can usually estimate those weights more reliably and pick up recurring patterns such as seasonal fluctuations.
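The temperature example can be sketched in a few lines of Python. This is a minimal illustration rather than a production forecaster: it fits the simplest autoregressive model, AR(1), in which tomorrow's value depends only on today's, using ordinary least squares. The function names `fit_ar1` and `predict_next` and the temperature values are assumptions made up for this sketch.

```python
def fit_ar1(series):
    """Fit x_t = c + phi * x_{t-1} by ordinary least squares."""
    xs = series[:-1]   # each "previous" value
    ys = series[1:]    # the value that followed it
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("series is constant; the weight cannot be estimated")
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    phi = cov_xy / var_x          # weight on the previous value
    c = mean_y - phi * mean_x     # constant term
    return c, phi


def predict_next(series, c, phi):
    """One-step-ahead forecast from the most recent observation."""
    return c + phi * series[-1]


# Hypothetical daily temperatures in degrees Celsius (illustrative only).
temps = [30.0, 31.0, 29.0, 30.5, 31.5, 30.0, 29.5, 30.5]
c, phi = fit_ar1(temps)
forecast = predict_next(temps, c, phi)
print(f"c={c:.3f}, phi={phi:.3f}, next-day forecast={forecast:.2f} C")
```

A real forecaster would typically use more than one lag and far more data; eight observations are only enough to show the mechanics.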

In natural language processing, autoregressive models work the same way. In text generation, the model predicts the next word (more precisely, the next token) from the words that precede it. This method drives most AI-powered chatbots, autocomplete tools, and language models. The models learn word context, syntax, and a degree of semantics from large amounts of text. They do not reason the way humans do; they produce meaningful sequences by applying statistical patterns in language.

One of the best-known examples is OpenAI's GPT series, where each new token is chosen according to its estimated probability of following the preceding text. Each word influences the next, creating sentences that appear natural and well-structured. Despite their impressive capabilities, autoregressive models have limitations, including biases inherited from training data and errors that accumulate over long sequences. Even so, their ability to generate human-like responses remains a major achievement in AI research.
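The word-by-word generation loop can be illustrated with a deliberately tiny stand-in. The sketch below is not how GPT works internally: large language models condition on the whole preceding context with a neural network, whereas this toy conditions only on the single previous word using counted frequencies (a bigram model). The corpus and the names `train_bigram` and `generate` are made up for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_words, rng):
    """Generate one word at a time, each sampled given the previous word."""
    out = [start]
    for _ in range(max_words - 1):
        followers = counts.get(out[-1])
        if not followers:  # no observed continuation: stop early
            break
        candidates = list(followers.keys())
        weights = list(followers.values())
        # Sample the next word in proportion to how often it followed out[-1].
        out.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(out)


corpus = "the cat sat on the mat and the cat saw the dog on the mat"
model = train_bigram(corpus)
print(generate(model, "the", 8, random.Random(0)))
```

The structure of the loop is the part that carries over to larger models: each generated word is appended to the output and becomes the input for the next prediction.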

Applications of Autoregressive Models

Autoregressive models extend far beyond simple text prediction. They are used across industries that rely on pattern recognition and forecasting. Financial institutions, for example, use them to model stock market movements. By analyzing past price fluctuations, these models attempt to forecast future trends, helping investors make informed decisions. While no model can predict market behavior with certainty, autoregressive approaches support decision-making by highlighting plausible scenarios.

In climate science, autoregressive models help predict weather patterns and long-term climate changes. By feeding historical temperature, pressure, and humidity data into the model, meteorologists can generate forecasts with greater accuracy. These predictions contribute to early warnings for storms, droughts, and other extreme weather events, allowing communities to prepare in advance.

Speech recognition is another domain where autoregressive models are widely applied. Virtual assistants like Siri and Google Assistant rely on sequence models of this kind to interpret spoken words and generate responses. When someone speaks into a device, the model processes the audio signal as a sequence and predicts the most likely transcription, with each predicted unit conditioned on what has been recognized so far. This ability has transformed voice-controlled technology, making interactions with machines more seamless.

Music generation also benefits from autoregressive modeling. AI-driven music composition tools analyze previous notes and rhythms to predict what should come next. By training on vast datasets of musical compositions, these models can create original pieces that mimic the style of classical composers or contemporary artists. While they don’t possess creative intent, their ability to generate music based on structured rules makes them valuable tools for musicians and producers.

Challenges and Future of Autoregressive Models

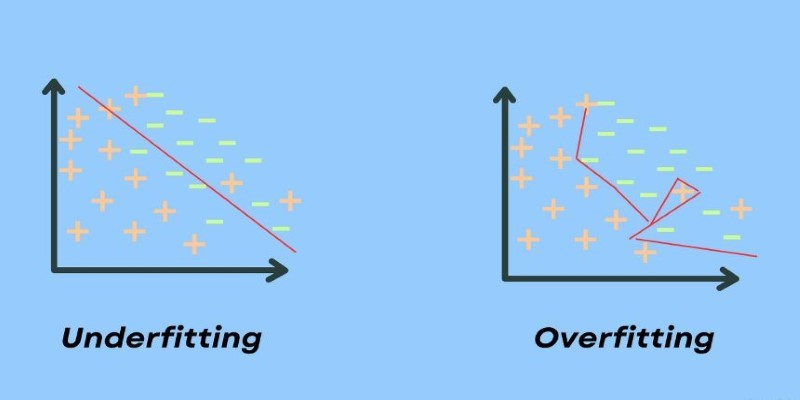

Despite their strengths, autoregressive models are not without challenges. One major drawback is error accumulation. Because each prediction is fed back in as input for the next one, a single mistake can snowball into increasingly inaccurate results. This is especially problematic in long-form text generation or extended forecasting, where small errors early on can grow into large deviations over time.
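Error accumulation can be shown with a small deterministic experiment. The sketch below assumes a hypothetical "true" process and a model whose weight is slightly wrong (0.95 instead of 0.90); both sets of numbers are invented, and noise is left out to keep the effect easy to see. It compares one-step-ahead prediction, where the model always sees the true previous value, with free-running prediction, where the model is fed its own previous output.

```python
def step(x, c, phi):
    """Apply one AR(1) update without noise."""
    return c + phi * x


TRUE_C, TRUE_PHI = 1.0, 0.90     # hypothetical "real" process
MODEL_C, MODEL_PHI = 1.0, 0.95   # hypothetical model with a slightly wrong weight


def compare(x0, horizon):
    """Return per-step absolute errors for both prediction modes."""
    truth = [x0]
    for _ in range(horizon):
        truth.append(step(truth[-1], TRUE_C, TRUE_PHI))

    one_step_err, free_run_err = [], []
    fed_back = x0
    for t in range(1, horizon + 1):
        # One-step-ahead: condition on the true previous value.
        one_step = step(truth[t - 1], MODEL_C, MODEL_PHI)
        # Free-running: condition on the model's own previous output.
        fed_back = step(fed_back, MODEL_C, MODEL_PHI)
        one_step_err.append(abs(one_step - truth[t]))
        free_run_err.append(abs(fed_back - truth[t]))
    return one_step_err, free_run_err


one_step_err, free_run_err = compare(x0=5.0, horizon=20)
for t in (1, 5, 10, 20):
    print(f"step {t:2d}: one-step error={one_step_err[t - 1]:.2f}, "
          f"free-running error={free_run_err[t - 1]:.2f}")
```

In this setup the one-step error stays small because it is corrected by a fresh observation at every step, while the free-running error keeps growing because each slightly wrong output becomes the next input.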

Another issue is computational efficiency. Autoregressive models process sequences step by step, which can be slow when dealing with large datasets or real-time applications. Generating text, for example, requires predicting one word at a time, making it difficult to scale for high-speed applications. Researchers are exploring ways to mitigate these inefficiencies through techniques like parallelization and hybrid architectures.

Bias in training data is another concern. Since autoregressive models learn from existing datasets, any biases present in the data can be reflected in their outputs. If a model is trained on biased text, it may generate biased responses, reinforcing stereotypes or misinformation. Addressing this requires careful curation of training data and ongoing monitoring of model behavior.

Looking ahead, autoregressive models will continue to evolve, particularly in AI-driven creativity and decision-making. Hybrid approaches, combining autoregression with other deep learning techniques, show promise in improving efficiency and accuracy. Additionally, advancements in reinforcement learning and self-supervised training could reduce error accumulation and improve long-term coherence.

As AI systems become more sophisticated, autoregressive models will remain at the core of many breakthroughs. Whether in finance, healthcare, entertainment, or communication, their ability to analyze sequences and generate predictions makes them an essential tool in artificial intelligence. While challenges remain, ongoing research and innovation will push the boundaries of what these models can achieve.

Conclusion

Autoregressive models have become a cornerstone of artificial intelligence, allowing machines to predict, generate, and analyze sequential data. From forecasting market trends to generating human-like text, their impact spans industries. They are powerful but not without flaws: errors can compound over long sequences, and biases in training data remain a challenge. Ongoing research is improving their reliability and efficiency, and their ability to recognize patterns and anticipate what comes next keeps them central to AI-driven decision-making and creative applications.