Cluster analysis is a key technique in data science, helping uncover patterns and relationships within datasets. It plays a crucial role in market segmentation, anomaly detection, and genetics. R, a powerful statistical computing language, offers robust tools for efficient clustering. By grouping similar data points, clustering enhances decision-making in various fields, from customer analytics to medical research.

Whether you are examining social trends or business metrics, cluster analysis in R can yield valuable information. With the right technique, such as k-means or hierarchical clustering, raw data can be turned into meaningful groups that support smarter strategies and deeper insight.

Understanding Cluster Analysis

Cluster analysis is the process of grouping similar data points according to their characteristics. Unlike classification, which assigns observations to predefined labels, clustering is an unsupervised method that discovers the natural groupings in the data. This makes it useful for uncovering underlying structure when no categories are known in advance.

The most common clustering techniques are hierarchical clustering, k-means clustering, and density-based clustering. Each has its own strengths, and the choice depends on the type of data and the goal of the analysis. K-means, for example, works well when the number of clusters is known in advance, whereas hierarchical clustering offers more flexibility for exploring how groups relate to one another. Density-based techniques such as DBSCAN excel at detecting clusters of irregular shapes and sizes.

Cluster analysis also depends on choosing an appropriate similarity or distance measure. Metrics such as Euclidean distance, Manhattan distance, or cosine similarity determine which data points count as close to one another, and therefore how they are grouped. The quality of the resulting clusters depends heavily on this choice. Preprocessing steps such as normalization and scaling keep features with large numeric ranges from dominating the distance calculation and biasing the clusters.
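To make the difference between these measures concrete, here is a small sketch on three hypothetical points (the data are invented for illustration). Base R's dist() supports "euclidean" and "manhattan" directly; cosine similarity has no built-in in base R, so this sketch computes it by normalizing each row to unit length.

```r
# Three hypothetical observations described by two numeric features
pts <- rbind(a = c(1, 2), b = c(4, 6), c = c(2, 4))

# Straight-line distance: a-b is sqrt(3^2 + 4^2) = 5
euc <- dist(pts, method = "euclidean")
print(euc)

# Sum of absolute coordinate differences: a-b is 3 + 4 = 7
man <- dist(pts, method = "manhattan")
print(man)

# Cosine similarity compares direction, not magnitude:
# divide each row by its length, then take pairwise dot products
unit <- pts / sqrt(rowSums(pts^2))
cos_sim <- unit %*% t(unit)
print(round(cos_sim, 3))
```

Points a and c point in exactly the same direction, so their cosine similarity is 1 even though their Euclidean distance is not 0. Which notion of "close" is appropriate depends on whether magnitude matters for the problem.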

Preparing Data for Cluster Analysis

Datasets need preparation before cluster analysis in R. Raw data often contains noise, missing values, or features on very different scales, any of which can skew clustering results. Packages such as dplyr (part of the tidyverse) help clean and preprocess data effectively, while the cluster package supplies clustering algorithms and diagnostics used later in the analysis.

The first step is loading a dataset, for example with the read.csv() function. Common strategies for handling missing values include mean imputation and removing rows with too many missing entries. Once the dataset is clean, standardization ensures that variables with larger numerical ranges do not dominate the clustering algorithm. This is usually done with the scale() function, which by default centers each column to mean 0 and rescales it to standard deviation 1.
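A minimal sketch of these preparation steps follows. The inline CSV, its column names, and the "more than half missing" threshold for dropping rows are all assumptions made for illustration; with real data you would pass a file path to read.csv() instead of the `text` argument.

```r
# Hypothetical inline CSV standing in for a real file;
# with your own data you would call read.csv("path/to/file.csv") instead
raw <- read.csv(text = "income,age,visits
52000,34,12
61000,NA,8
NA,45,NA
48000,29,15
75000,51,4")

# Drop rows where more than half of the values are missing (assumed threshold)
row_na_share <- rowMeans(is.na(raw))
clean <- raw[row_na_share <= 0.5, ]

# Mean imputation for the remaining gaps, column by column
for (col in names(clean)) {
  clean[[col]][is.na(clean[[col]])] <- mean(clean[[col]], na.rm = TRUE)
}

# Standardize: each column now has mean 0 and standard deviation 1
scaled <- scale(clean)
print(round(colMeans(scaled), 10))
print(apply(scaled, 2, sd))
```

Without the final step, income (in the tens of thousands) would swamp age and visits in any distance calculation.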

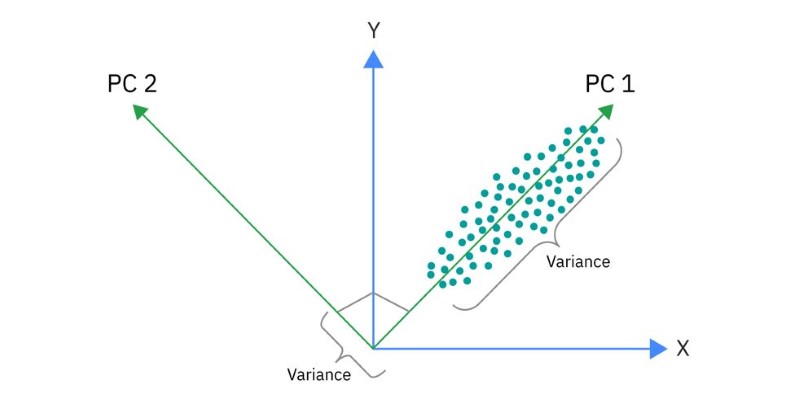

Principal Component Analysis (PCA) can also reduce dimensionality before clustering, which helps both performance and visualization. With high-dimensional data, PCA replaces the original variables with a smaller set of principal components that capture most of the variance, reducing computation time. The prcomp() function in R simplifies this process, making it easier to handle datasets with many variables.
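As a sketch, the snippet below runs PCA with prcomp() on R's built-in USArrests dataset (chosen here only as a convenient example; it is not part of the original text) and keeps the first two components as clustering inputs. Keeping two components is an assumption; in practice you would choose the number based on the variance explained.

```r
# PCA on the built-in USArrests data (50 states x 4 variables);
# scale. = TRUE standardizes each variable before the rotation
pca <- prcomp(USArrests, scale. = TRUE)

# Proportion of total variance explained by each component
var_explained <- pca$sdev^2 / sum(pca$sdev^2)
print(round(var_explained, 3))
print(round(cumsum(var_explained), 3))

# Keep the first two components as a lower-dimensional input for clustering
scores <- pca$x[, 1:2]
head(scores)
```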

Implementing Cluster Analysis in R

The choice of algorithm for cluster analysis in R depends on the nature of the dataset. K-means clustering is one of the most widely used methods because it is efficient and simple. The kmeans() function in R partitions the data into a specified number of clusters, and because k-means starts from randomly chosen centers, running it with several starts (the nstart argument) gives more stable results. Since the number of clusters must be chosen up front, picking it well is crucial. It is often determined with the elbow method: plot the total within-cluster sum of squares against the number of clusters and select the point where the reduction starts to level off. The fviz_nbclust() function from the factoextra package provides a visual way to find the optimal number of clusters.
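The elbow method can be sketched in base R by fitting kmeans() for a range of k and collecting tot.withinss. This example uses the built-in USArrests data for illustration, and the final choice of k = 4 is an assumption standing in for whatever value you read off your own elbow plot. The factoextra one-liner is shown as a comment for readers who have that package installed.

```r
set.seed(42)  # k-means starts from random centers; fix the seed for reproducibility
x <- scale(USArrests)

# Elbow method: total within-cluster sum of squares for k = 1..8
wss <- sapply(1:8, function(k) kmeans(x, centers = k, nstart = 25)$tot.withinss)
plot(1:8, wss, type = "b",
     xlab = "Number of clusters k",
     ylab = "Total within-cluster sum of squares")

# Equivalent plot via factoextra, if installed:
# factoextra::fviz_nbclust(x, kmeans, method = "wss")

# Fit the final model with the k read off the elbow (4 is an assumption here)
km <- kmeans(x, centers = 4, nstart = 25)
print(table(km$cluster))   # cluster sizes
print(km$centers)          # cluster centers on the standardized scale
```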

Another popular approach is hierarchical clustering, which does not require specifying the number of clusters beforehand. Instead, it builds a tree-like structure, known as a dendrogram, that represents the relationships among data points. The hclust() function in R performs hierarchical clustering on a distance matrix, typically produced by dist(), and the linkage method (such as complete, single, or average linkage) affects the final cluster structure. Once the tree is built, cutree() cuts it into the desired number of clusters.
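The following sketch shows the full hierarchical workflow on the built-in USArrests data: compute distances, build trees with three linkage methods, plot a dendrogram, and cut it into groups. Cutting into four clusters is an assumption chosen for illustration; comparing the group sizes shows how strongly the linkage choice can change the result.

```r
x <- scale(USArrests)
d <- dist(x, method = "euclidean")   # hclust() expects a distance matrix

hc_complete <- hclust(d, method = "complete")
hc_average  <- hclust(d, method = "average")
hc_single   <- hclust(d, method = "single")

# Dendrogram for complete linkage, with boxes around a 4-cluster cut
plot(hc_complete, cex = 0.6, main = "Complete linkage")
rect.hclust(hc_complete, k = 4, border = "red")

# Cut each tree into 4 clusters and compare group sizes across linkages
groups_complete <- cutree(hc_complete, k = 4)
print(table(groups_complete))
print(table(cutree(hc_average, k = 4)))
print(table(cutree(hc_single, k = 4)))
```

Single linkage tends to chain points together and often produces one large cluster plus a few tiny ones, which is why the linkage method deserves as much attention as the number of clusters.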

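DBSCAN is a good choice for datasets with noise or clusters of irregular shape. Like hierarchical clustering, and unlike k-means, DBSCAN does not require specifying the number of clusters in advance. Instead, it uses a density-based approach: points in dense regions form clusters, and points in sparse regions are labeled as noise. The dbscan() function from the dbscan package implements this method in R. DBSCAN works well at identifying clusters of different shapes, but it requires careful selection of its parameters: eps, which controls the neighborhood radius, and minPts, the minimum number of points needed to form a dense region. Because a single eps applies to the whole dataset, DBSCAN can struggle when clusters differ greatly in density.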
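Here is a minimal DBSCAN sketch using the dbscan package, assuming it is installed from CRAN. The data are synthetic (two dense blobs plus scattered uniform noise, invented for illustration), and eps = 0.4 with minPts = 5 are assumed values. A common heuristic for eps is the k-nearest-neighbor distance plot: sort each point's distance to its k-th neighbor and look for the "knee" where the curve bends sharply upward.

```r
library(dbscan)
set.seed(1)

# Hypothetical data: two dense blobs plus scattered noise points
blob1 <- matrix(rnorm(100, mean = 0, sd = 0.3), ncol = 2)
blob2 <- matrix(rnorm(100, mean = 3, sd = 0.3), ncol = 2)
noise <- matrix(runif(20, min = -2, max = 5), ncol = 2)
x <- rbind(blob1, blob2, noise)

# k-NN distance plot to guide the choice of eps (k = minPts - 1)
kNNdistplot(x, k = 4)
abline(h = 0.4, lty = 2)   # eps = 0.4 is an assumption read off the knee

db <- dbscan(x, eps = 0.4, minPts = 5)
print(db)
print(table(db$cluster))   # cluster 0 holds the points labeled as noise
```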

Once clustering is complete, evaluating cluster quality is essential. Silhouette analysis measures how well each data point fits within its assigned cluster compared with the nearest neighboring cluster. The silhouette() function from the cluster package computes a silhouette width between -1 and 1 for every point. A higher average silhouette width indicates well-defined clusters, while values near 0 suggest overlapping groups and negative values suggest points that may be assigned to the wrong cluster.
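One way to use silhouettes is to compare the average width across several candidate values of k, as sketched below with k-means on the built-in USArrests data (an illustrative choice). The range 2 to 6 is an assumption; k = 1 is excluded because silhouettes are undefined for a single cluster.

```r
library(cluster)
set.seed(42)
x <- scale(USArrests)
d <- dist(x)

# Average silhouette width for k = 2..6
avg_sil <- sapply(2:6, function(k) {
  km <- kmeans(x, centers = k, nstart = 25)
  sil <- silhouette(km$cluster, d)   # one row per point: cluster, neighbor, sil_width
  mean(sil[, "sil_width"])
})
names(avg_sil) <- 2:6
print(round(avg_sil, 3))

# Per-point silhouette plot for the best-scoring k
best_k <- as.integer(names(which.max(avg_sil)))
km_best <- kmeans(x, centers = best_k, nstart = 25)
plot(silhouette(km_best$cluster, d))
```

The elbow plot and the silhouette comparison do not always agree. When they differ, inspecting the per-point silhouette plot helps show which clusters are poorly separated.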

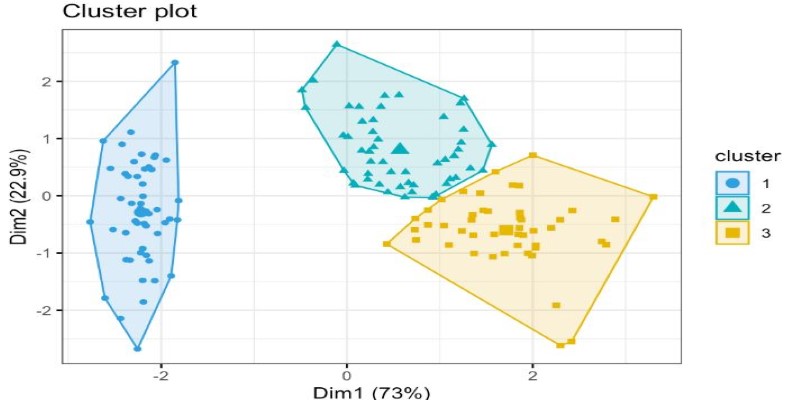

Interpreting and Visualizing Clusters

After clustering, visualization is crucial for understanding the results, and R provides several tools for it. Scatter plots built with ggplot2 can display clustered data in two dimensions. For datasets with more than two variables, the data can first be projected onto its principal components. The fviz_cluster() function in factoextra, which builds on ggplot2, does this automatically to help interpret cluster structures.
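A sketch of a PCA-based cluster plot with ggplot2 follows, assuming the ggplot2 package is installed. It clusters the built-in USArrests data with k-means (k = 4 is an assumed value), projects the standardized data onto the first two principal components, and colors each state by its cluster. The commented factoextra call is a one-line alternative.

```r
library(ggplot2)
set.seed(42)
x <- scale(USArrests)
km <- kmeans(x, centers = 4, nstart = 25)

# Project the 4-D data onto the first two principal components
pcs <- prcomp(x)$x[, 1:2]
plot_df <- data.frame(PC1 = pcs[, 1], PC2 = pcs[, 2],
                      cluster = factor(km$cluster),
                      state = rownames(USArrests))

p <- ggplot(plot_df, aes(PC1, PC2, colour = cluster)) +
  geom_point(size = 2) +
  geom_text(aes(label = state), size = 2.5, vjust = -0.8, show.legend = FALSE) +
  labs(title = "k-means clusters on the first two principal components")
print(p)

# factoextra wraps the same idea in one call, if installed:
# factoextra::fviz_cluster(km, data = x)
```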

Heatmaps offer another way to examine clustering results, particularly for hierarchical clustering. The base R heatmap() function reorders rows and columns using hierarchical clustering and draws the dendrograms alongside a color grid, giving an intuitive view of how data points relate within clusters. Box plots of each variable by cluster, together with the cluster centers, show how values vary within and across groups.
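The sketch below draws a heatmap of the standardized USArrests data (an illustrative dataset) and then profiles k-means clusters with box plots and per-cluster means on the original scale. Because the data are already standardized, heatmap()'s own row scaling is turned off; complete linkage and k = 4 are assumed choices.

```r
set.seed(42)
x <- scale(USArrests)

# heatmap() clusters rows and columns with hclust() and draws both dendrograms
heatmap(x, scale = "none",
        hclustfun = function(d) hclust(d, method = "complete"),
        cexRow = 0.6)

# Box plots: distribution of each original variable within each k-means cluster
km <- kmeans(x, centers = 4, nstart = 25)
df <- data.frame(USArrests, cluster = factor(km$cluster))
op <- par(mfrow = c(2, 2))
for (v in names(USArrests)) {
  boxplot(df[[v]] ~ df$cluster, main = v, xlab = "Cluster", ylab = v)
}
par(op)

# Cluster profiles on the original scale: per-cluster mean of each variable
profiles <- aggregate(. ~ cluster, data = df, FUN = mean)
print(profiles)
```

Reading the profile table alongside the box plots is often the quickest way to put a plain-language label on each cluster.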

For business or research applications, interpreting clusters involves identifying common characteristics among grouped data points. In customer segmentation, for example, clusters may reveal purchasing behaviors or preferences. In healthcare, clustering can help identify patient groups with similar medical conditions, aiding in targeted treatments.

Conclusion

Making sense of complex data is never easy, but cluster analysis in R simplifies the process by identifying natural groupings. Whether you use k-means for quick segmentation, hierarchical clustering for deeper insight into how groups relate, or DBSCAN for handling noisy data, the right approach depends on the dataset’s structure. Proper preprocessing and careful evaluation help ensure that clusters are meaningful and useful. Visualization techniques like scatter plots and heatmaps bring clarity to the results, making the analysis more intuitive. With R’s robust clustering tools, anyone working with data, from businesses to researchers, can extract valuable insights that lead to smarter decisions and a clearer understanding of patterns.