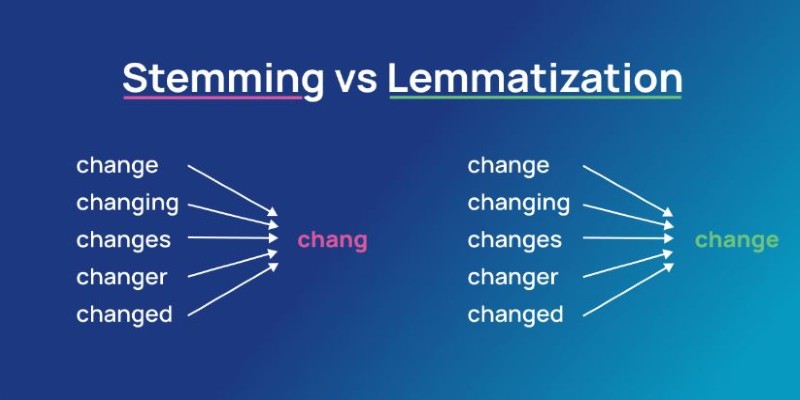

Language is messy. People use different forms of the same word depending on context, grammar, or even personal style. For machines, this variability creates a challenge: how can they understand that "running" and "ran" are forms of the same word? That’s where lemmatization and stemming come in. These two NLP techniques reduce words to a base form, helping computers process language efficiently.

Though they share a common objective, they go about it in very different ways. Stemming is fast but coarse, cutting down words indiscriminately. Lemmatization is more careful, mapping words to their correct dictionary forms. Understanding the difference helps you choose the right one for a given language processing task.

What is Stemming?

Stemming is the simpler of the two methods. It strips affixes (in practice, mostly suffixes) from a word to reach a root form, using rules defined in advance and without checking whether the result is a valid word. The word "running" may be stemmed to "run" by cutting off "-ing" and undoing the doubled consonant, but a crude rule set could just as easily cut "better" down to "bet," which is not the intended root form.
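To make the idea of rule-based suffix stripping concrete, here is a minimal sketch in Python. It is a toy, not the Porter algorithm: the suffix list, the minimum stem length, and the function name `toy_stem` are all assumptions chosen for illustration.

```python
# Toy suffix-stripping stemmer: an illustrative sketch, not the Porter algorithm.
# The suffix list and minimum stem length are assumptions made for this example.
SUFFIXES = ("ing", "ed", "es", "s")  # checked in this order


def toy_stem(word: str, min_stem_len: int = 3) -> str:
    """Strip the first matching suffix, then undo a doubled final consonant."""
    word = word.lower()
    for suffix in SUFFIXES:
        stem = word[: -len(suffix)]
        if word.endswith(suffix) and len(stem) >= min_stem_len:
            # "running" -> "runn" -> "run"; keep "ll" and "ss" (fall, miss)
            if stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return word  # no rule applied


for w in ["running", "jumped", "cats", "troubling", "ran"]:
    print(w, "->", toy_stem(w))
# running -> run
# jumped -> jump
# cats -> cat
# troubling -> troubl
# ran -> ran
```

Note that the function never checks a dictionary: "troubl" is not a word, and the irregular form "ran" is left untouched because no suffix rule applies to it.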

The most popular stemming algorithm is the Porter Stemmer, first presented by Martin Porter in 1980. It reduces words by applying a sequence of rules in stages. There is also the Lancaster Stemmer, which is more aggressive and tends to over-stem words so much that they lose their readability. Another option is the Snowball Stemmer, which is an enhanced version of the Porter algorithm and supports more than one language.

Speed is one of stemming's key strengths. Since it uses rule-based reductions instead of complicated word analysis, it runs fast. It is therefore particularly useful where high precision is not needed, as in search engines whose purpose is to retrieve a large volume of documents. The catch is that stemming tends to output strings that are not true dictionary words, which can lower the quality of downstream NLP applications.

What is Lemmatization?

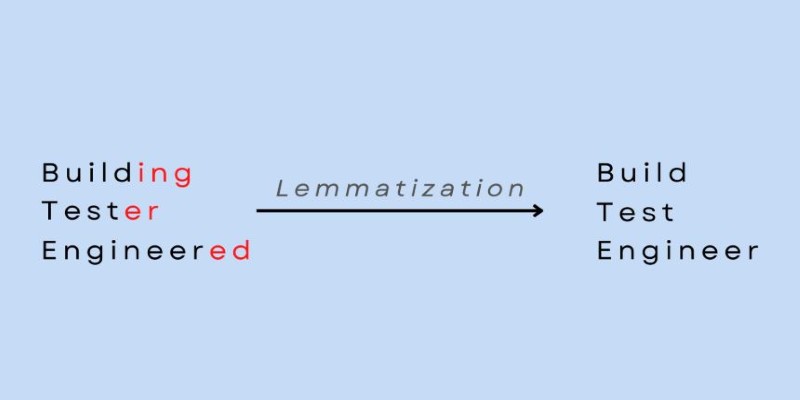

Lemmatization is a more sophisticated process that converts words into their base or dictionary form, known as a lemma. Unlike stemming, it considers the meaning and grammatical role of a word. It relies on linguistic knowledge to ensure that the root word is valid, making it a more precise method. For example, while stemming might reduce "running" to "run," lemmatization will also recognize "better" as a form of "good," which a stemmer cannot do.

To achieve this, lemmatization typically relies on a lexical database like WordNet, which helps determine a word’s lemma based on its part of speech. This extra step makes lemmatization slower than stemming but much more accurate. The additional processing cost is often justified in applications where precision is crucial, such as machine translation, chatbot development, and sentiment analysis.

Lemmatization ensures that words are reduced to a standard form that maintains their meaning. For example, "mice" and "mouse" would both be lemmatized to "mouse," whereas a stemmer might not handle this transformation correctly. Similarly, "ran" would be lemmatized to "run," recognizing that both words share the same base meaning.
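The role of the dictionary and the part of speech can be illustrated with a small lookup-based sketch. The lexicon below is a tiny hand-written stand-in for a resource such as WordNet; its entries, the POS labels, and the function name `toy_lemmatize` are assumptions made for this example, not a real library API.

```python
# Toy dictionary-based lemmatizer: a sketch with a tiny hand-written lexicon.
# Real lemmatizers consult a full lexical resource; these entries and POS
# labels are assumptions made for illustration only.
LEXICON = {
    ("better", "adj"): "good",
    ("better", "adv"): "well",
    ("mice", "noun"): "mouse",
    ("ran", "verb"): "run",
    ("running", "verb"): "run",
    ("running", "noun"): "running",  # as in "the running of the company"
}


def toy_lemmatize(word: str, pos: str) -> str:
    """Return the lemma for (word, pos), or the word itself if it is unknown."""
    word = word.lower()
    return LEXICON.get((word, pos), word)


print(toy_lemmatize("better", "adj"))    # good
print(toy_lemmatize("mice", "noun"))     # mouse
print(toy_lemmatize("ran", "verb"))      # run
print(toy_lemmatize("running", "noun"))  # running
```

The same surface form can map to different lemmas depending on its part of speech, which is why a lemmatizer needs grammatical information that a stemmer never looks at.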

Key Differences Between Lemmatization and Stemming

The primary distinction between stemming and lemmatization lies in their approach to reducing words. Stemming follows predefined rules to remove affixes without considering the context, which can lead to incorrect word forms. Lemmatization, on the other hand, ensures that words are transformed into their proper dictionary form based on linguistic analysis.

Another key difference is accuracy vs. speed. Stemming is much faster since it follows a simple rule-based approach, making it suitable for large-scale applications like search indexing. Lemmatization, while more resource-intensive, is ideal for applications where accuracy is paramount.

Stemming can sometimes lead to over-stemming (reducing words so aggressively that unrelated words collapse into the same stem) or under-stemming (failing to reduce related words to a common stem). It can also produce non-words: for example, "troubling" might be stemmed to "troubl," which is not a valid word. Lemmatization avoids this issue by considering context and word meaning, ensuring that reductions produce actual dictionary words.
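Both failure modes are easy to reproduce with a deliberately crude rule set. The sketch below groups words by stem; the suffix list and the names `crude_stem` and `group_by_stem` are hypothetical and chosen only to trigger the two problems.

```python
from collections import defaultdict

# Deliberately crude rule list (an assumption for this demo), checked in order.
CRUDE_SUFFIXES = ("ity", "al", "ing", "s", "e")


def crude_stem(word: str) -> str:
    """Strip the first matching suffix if at least three letters remain."""
    for suffix in CRUDE_SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def group_by_stem(words):
    """Map each stem to the list of input words that reduce to it."""
    groups = defaultdict(list)
    for w in words:
        groups[crude_stem(w)].append(w)
    return dict(groups)


groups = group_by_stem(["universe", "university", "universal", "run", "ran"])
print(groups)
# {'univers': ['universe', 'university', 'universal'], 'run': ['run'], 'ran': ['ran']}
```

Here "universe," "university," and "universal" collapse into the single stem "univers" even though their meanings differ (over-stemming), while "run" and "ran" stay in separate groups even though they are forms of the same verb (under-stemming).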

Stemming is often favored in tasks where generalization is more important than precision. For example, in search engines, stemming helps retrieve more results by grouping different word variations. If someone searches for "running," stemming ensures that documents containing "run" and "runs" also appear in the results, although an irregular form such as "ran" is usually missed because no suffix rule maps it to "run." Lemmatization, by contrast, is useful when preserving meaning is crucial, such as in language translation or text summarization tools.
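The search scenario can be sketched with a tiny inverted index keyed by stems. Everything here is illustrative: the stemmer is a minimal hypothetical one, and the documents and function names (`simple_stem`, `build_index`, `search`) are made up for the example.

```python
from collections import defaultdict


def simple_stem(word: str) -> str:
    """Hypothetical minimal stemmer: strips "ing" or "s", undoubles a final consonant."""
    word = word.lower()
    for suffix in ("ing", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            if stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return word


def build_index(docs):
    """Map each stem to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.split():
            index[simple_stem(token)].add(doc_id)
    return index


def search(index, query):
    """Return sorted ids of documents sharing the query's stem."""
    return sorted(index.get(simple_stem(query), set()))


docs = {
    1: "she runs every morning",
    2: "running shoes on sale",
    3: "he ran a marathon",
    4: "a long run",
}
index = build_index(docs)
print(search(index, "running"))  # [1, 2, 4]
```

The query "running" matches the documents containing "runs," "running," and "run" because all three share the stem "run." Document 3 is not returned: with this toy stemmer, "ran" keeps its own stem, so matching it would require a lemmatizer or an exception list.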

When to Use Stemming and When to Use Lemmatization

Choosing between stemming and lemmatization depends on the specific needs of an NLP task. If processing speed is the priority and minor errors are acceptable, stemming is the better option. It is commonly used in information retrieval systems, where retrieving a broad set of results is more valuable than linguistic accuracy.

However, if an application demands precision, lemmatization is the way to go. Chatbots, grammar checkers, machine learning models, and language analysis tools benefit from lemmatization because it ensures that words retain their correct meanings. Sentiment analysis, for instance, requires understanding words in context—something that a simple stemmer cannot effectively achieve.

Another consideration is language complexity. English has relatively simple morphological rules, so stemming can often be effective. However, in languages with more complex word structures, such as Arabic or Finnish, lemmatization is usually needed to handle intricate word forms correctly.

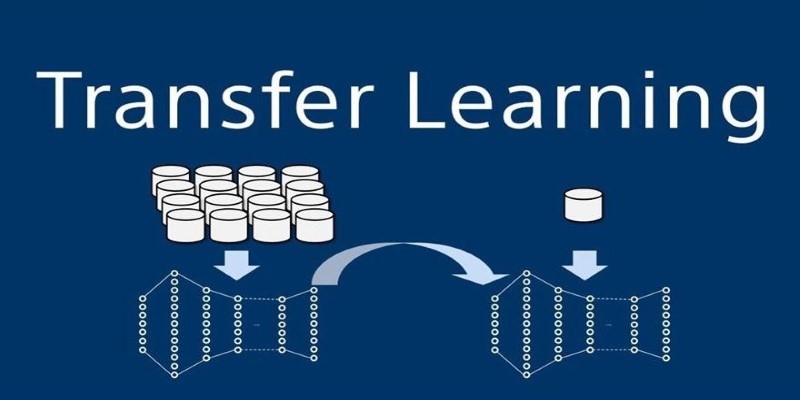

In some cases, combining both methods can yield better results. For example, a system might use stemming for rapid initial processing and then apply lemmatization for fine-tuned adjustments. This hybrid approach balances speed and accuracy, making it useful in areas like spam detection and content categorization.
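One possible reading of this hybrid idea is a two-stage normalizer: a cheap rule-based pass handles every word, and a small dictionary then corrects the forms the rules get wrong. The sketch below assumes a hypothetical exception table (`LEMMA_OVERRIDES`) and toy suffix rules; a real system would use a proper stemmer and lemmatizer.

```python
# Hypothetical exception table standing in for the lemmatization stage.
LEMMA_OVERRIDES = {"ran": "run", "mice": "mouse", "better": "good"}


def fast_stem(word: str) -> str:
    """Stage 1: cheap suffix stripping (toy rules, assumed for this sketch)."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def hybrid_normalize(word: str) -> str:
    """Stem every word, then let the dictionary override known irregular forms."""
    word = word.lower()
    stem = fast_stem(word)                  # stage 1: fast rule-based pass
    return LEMMA_OVERRIDES.get(word, stem)  # stage 2: dictionary correction


print([hybrid_normalize(w) for w in ["Jumped", "ran", "mice", "walks"]])
# ['jump', 'run', 'mouse', 'walk']
```

Regular forms such as "jumped" and "walks" are handled by the rules alone, while the irregular forms "ran" and "mice" are fixed by the lookup, so the expensive resource only needs to cover the cases the stemmer cannot.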

Conclusion

Stemming and lemmatization are key NLP techniques for reducing words to their base forms. Stemming is faster but less precise, making it well suited to large-scale text processing. Lemmatization improves accuracy by considering word meaning, which benefits applications like chatbots and sentiment analysis. Choosing between them depends on the balance between speed and precision. In some cases, a hybrid approach works best. As NLP advances, both methods will continue to play a vital role in improving language understanding and machine interactions.